Technology

How trolls, lawsuits and layoffs led to a ‘trust and safety winter’ for misinformation ahead of election

Nina Jankowicz, a disinformation expert and vice president at the Centre for Information Resilience, gestures during an interview with AFP in Washington, DC, on March 23, 2023.

Bastien Inzaurralde | AFP | Getty Images

Nina Jankowicz’s dream job has turned into a nightmare.

For the past 10 years, she’s been a disinformation researcher, studying and analyzing the spread of Russian propaganda and internet conspiracy theories. In 2022, she was appointed to the White House’s Disinformation Governance Board, which was created to help the Department of Homeland Security fend off online threats.

Now, Jankowicz’s time is filled with government inquiries, lawsuits and a barrage of harassment, all the result of an extreme level of hostility directed at people whose mission is to safeguard the internet, particularly ahead of presidential elections.

Jankowicz, the mother of a young child, says her anxiety has run so high, in part because of death threats, that she recently had a dream that a stranger broke into her house with a gun. She threw a punch in the dream that, in reality, grazed her bedside baby monitor. Jankowicz said she tries to stay out of public view and no longer announces when she’s going to events.

“I don’t want somebody who wishes harm to show up,” Jankowicz said. “I have had to change how I move through the world.”

In prior election cycles, researchers like Jankowicz were heralded by lawmakers and company executives for their work exposing Russian propaganda campaigns, Covid conspiracies and false voter fraud accusations. But 2024 has been different, marred by the potential threat of litigation by powerful people like X owner Elon Musk, as well as congressional investigations conducted by far-right politicians and an ever-increasing number of online trolls.

Alex Abdo, litigation director of the Knight First Amendment Institute at Columbia University, said the constant attacks and legal expenses have “unfortunately become an occupational hazard” for these researchers. Abdo, whose institute has filed amicus briefs in several lawsuits targeting researchers, said the “chill in the community is palpable.”

Jankowicz is one of more than two dozen researchers who spoke to CNBC about the changing environment of late and the safety concerns they now face for themselves and their families. Many declined to be named to protect their privacy and avoid further public scrutiny.

Whether they agreed to be named or not, the researchers all spoke of a more treacherous landscape this election season than in the past. The researchers said that conspiracy theories claiming that internet platforms try to silence conservative voices began during Trump’s first campaign for president nearly a decade ago and have steadily increased since then.

SpaceX and Tesla founder Elon Musk speaks at a town hall with Republican candidate for U.S. Senate Dave McCormick at the Roxain Theater on October 20, 2024 in Pittsburgh, Pennsylvania.

Michael Swensen | Getty Images

‘Those attacks take their toll’

The chilling effect is of particular concern because online misinformation is more prevalent than ever and, particularly with the rise of artificial intelligence, often even more difficult to recognize, according to the observations of some researchers. It’s the internet equivalent of taking cops off the streets just as robberies and break-ins are surging.

Jeff Hancock, head of the Stanford Internet Observatory, said we’re in a “trust and safety winter.” He’s experienced it firsthand.

After the SIO’s work looking into misinformation and disinformation during the 2020 election, the institute was sued three times in 2023 by conservative groups, who alleged that the organization’s researchers colluded with the federal government to censor speech. Stanford spent millions of dollars to defend its staff and students fighting the lawsuits.

During that time, SIO downsized significantly.

“Many people have lost their jobs or worse and especially that’s the case for our staff and researchers,” said Hancock, during the keynote of his organization’s third annual Trust and Safety Research Conference in September. “Those attacks take their toll.”

SIO didn’t respond to CNBC’s inquiry about the reason for the job cuts.

Google last month laid off several employees, including a director, in its trust and safety research unit just days before some of them were scheduled to speak at or attend the Stanford event, according to sources close to the layoffs who asked not to be named. In March, the search giant laid off a handful of employees on its trust and safety team as part of broader staff cuts across the company.

Google didn’t specify the reason for the cuts, telling CNBC in a statement that, “As we take on more responsibilities, particularly around new products, we make changes to teams and roles according to business needs.” The company said it’s continuing to grow its trust and safety team.

Jankowicz said she began to feel the hostility two years ago after her appointment to the Biden administration’s Disinformation Governance Board.

She and her colleagues say they faced repeated attacks from conservative media and Republican lawmakers, who alleged that the group restricted free speech. After just four months in operation, the board was shuttered.

In an August 2022 statement announcing the termination of the board, DHS didn’t provide a specific reason for the move, saying only that it was following the recommendation of the Homeland Security Advisory Council.

Jankowicz was then subpoenaed as part of an investigation by a subcommittee of the House Judiciary Committee intended to discover whether the federal government was colluding with researchers to “censor” Americans and conservative viewpoints on social media.

“I’m the face of that,” Jankowicz said. “It’s hard to deal with.”

Since being subpoenaed, Jankowicz said she’s also had to deal with a “cyberstalker,” who repeatedly posted about her and her child on social media site X, resulting in the need to obtain a protective order. Jankowicz has spent more than $80,000 in legal bills on top of the constant fear that online harassment will lead to real-world dangers.

On notorious online forum 4chan, Jankowicz’s face graced the cover of a munitions handbook, a manual teaching others how to build their own guns. Another person used AI software and a photo of Jankowicz’s face to create deepfake pornography, essentially putting her likeness onto explicit videos.

“I have been recognized on the street before,” said Jankowicz, who wrote about her experience in a 2023 story in The Atlantic with the headline, “I Shouldn’t Have to Accept Being in Deepfake Porn.”

One researcher, who spoke on condition of anonymity because of safety concerns, said she’s experienced more online harassment since Musk’s late 2022 takeover of Twitter, now known as X.

In a direct message that was shared with CNBC, a user of X threatened the researcher, saying they knew her home address and suggesting the researcher plan where she, her partner and their “little one will live.”

Within a month of receiving the message, the researcher and her family relocated.

Misinformation researchers say they’re getting no help from X. Instead, Musk’s company has launched several lawsuits against researchers and organizations for calling out X for failing to mitigate hate speech and false information.

In November, X filed a suit against Media Matters after the nonprofit media watchdog published a report showing that hateful content on the platform appeared next to ads from companies including Apple, IBM and Disney. Those companies paused their ad campaigns following the Media Matters report, which X’s lawyers described as “intentionally deceptive.”

Then there’s House Judiciary Chairman Jim Jordan, R-Ohio, who continues investigating alleged collusion between large advertisers and the nonprofit Global Alliance for Responsible Media (GARM), which was created in 2019 in part to help brands avoid having their promotions show up alongside content they deem harmful. In August, the World Federation of Advertisers said it was suspending GARM’s operations after X sued the group, alleging it organized an illegal ad boycott.

GARM said at the time that the allegations “caused a distraction and significantly drained its resources and finances.”

Abdo of the Knight First Amendment Institute said billionaires like Musk can use those types of lawsuits to tie up researchers and nonprofits until they go bankrupt.

Representatives from X and the House Judiciary Committee didn’t respond to requests for comment.

Less access to tech platforms

X’s actions aren’t limited to litigation.

Last year, the company altered how its data library can be used and, instead of offering it for free, started charging researchers $42,000 a month for the lowest tier of the service, which allows access to 50 million tweets.

Musk said at the time that the change was needed because the “free API is being abused badly right now by bot scammers & opinion manipulators.”

Kate Starbird, an associate professor at the University of Washington who studies misinformation on social media, said researchers relied on Twitter because “it was free, it was easy to get, and we would use it as a proxy for other places.”

“Maybe 90% of our effort was focused on just Twitter data because we had so much of it,” said Starbird, who was subpoenaed for a House Judiciary congressional hearing in 2023 related to her disinformation studies.

A more stringent policy will take effect on Nov. 15, shortly after the election, when X says that under its new terms of service, users risk a $15,000 penalty for accessing over 1 million posts in a day.

“One effect of X Corp.’s new terms of service will be to stifle that research when we need it most,” Abdo said in a statement.

Meta CEO Mark Zuckerberg attends the Senate Judiciary Committee hearing on online child sexual exploitation at the U.S. Capitol in Washington, D.C., on Jan. 31, 2024.

Nathan Howard | Reuters

It’s not just X.

In August, Meta shut down a tool called CrowdTangle, used to track misinformation and popular topics on its social networks. It was replaced with the Meta Content Library, which the company says provides “comprehensive access to the full public content archive from Facebook and Instagram.”

Researchers told CNBC that the change represented a significant downgrade. A Meta spokesperson said that the company’s new research-focused tool is more comprehensive than CrowdTangle and is better suited to election monitoring.

In addition to Meta, other apps like TikTok and Google-owned YouTube provide scant data access, researchers said, limiting how much content they can analyze. They say their work now often consists of manually tracking videos, comments and hashtags.

“We only know as much as our classifiers can find and only know as much as is accessible to us,” said Rachele Gilman, director of intelligence for The Global Disinformation Index.

In some cases, companies are even making it easier for falsehoods to spread.

For example, YouTube said in June of last year it would stop removing false claims about 2020 election fraud. And ahead of the 2022 U.S. midterm elections, Meta introduced a new policy allowing political ads to question the legitimacy of past elections.

YouTube works with hundreds of academic researchers from around the world today through its YouTube Researcher Program, which allows access to its global data API “with as much quota as needed per project,” a company spokeswoman told CNBC in a statement. She added that increasing access to new areas of data for researchers isn’t always straightforward because of privacy risks.

A TikTok spokesperson said the company offers qualifying researchers in the U.S. and the EU free access to various, regularly updated tools to study its service. The spokesperson added that TikTok actively engages researchers for feedback.

Not giving up

As this year’s election hits its home stretch, one particular concern for researchers is the period between Election Day and Inauguration Day, said Katie Harbath, CEO of tech consulting firm Anchor Change.

Fresh in everyone’s mind is Jan. 6, 2021, when rioters stormed the U.S. Capitol while Congress was certifying the results, an event that was organized in part on Facebook. Harbath, who was previously a public policy director at Facebook, said the certification process could again be messy.

“There’s this period of time where we might not know the winner, so companies are thinking about ‘what do we do with content?’” Harbath said. “Do we label, do we take down, do we reduce the reach?”

Despite their many challenges, researchers have scored some legal victories in their efforts to keep their work alive.

In March, a California federal judge dismissed a lawsuit by X against the nonprofit Center for Countering Digital Hate, ruling that the litigation was an attempt to silence X’s critics.

Three months later, a ruling by the Supreme Court allowed the White House to urge social media companies to remove misinformation from their platforms.

Jankowicz, for her part, has refused to give up.

Earlier this year, she founded the American Sunlight Project, which says its mission is “to ensure that citizens have access to trustworthy sources to inform the choices they make in their daily lives.” Jankowicz told CNBC that she wants to offer support to those in the field who have faced threats and other challenges.

“The uniting factor is that people are scared about publishing the sort of research that they were actively publishing around 2020,” Jankowicz said. “They don’t want to deal with threats, they certainly don’t want to deal with legal threats and they’re worried about their positions.”

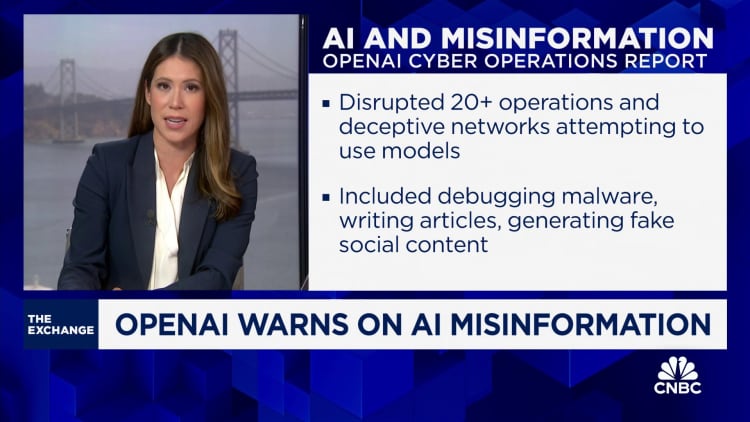

Guard: OpenAI warns of AI incorrect information forward of election